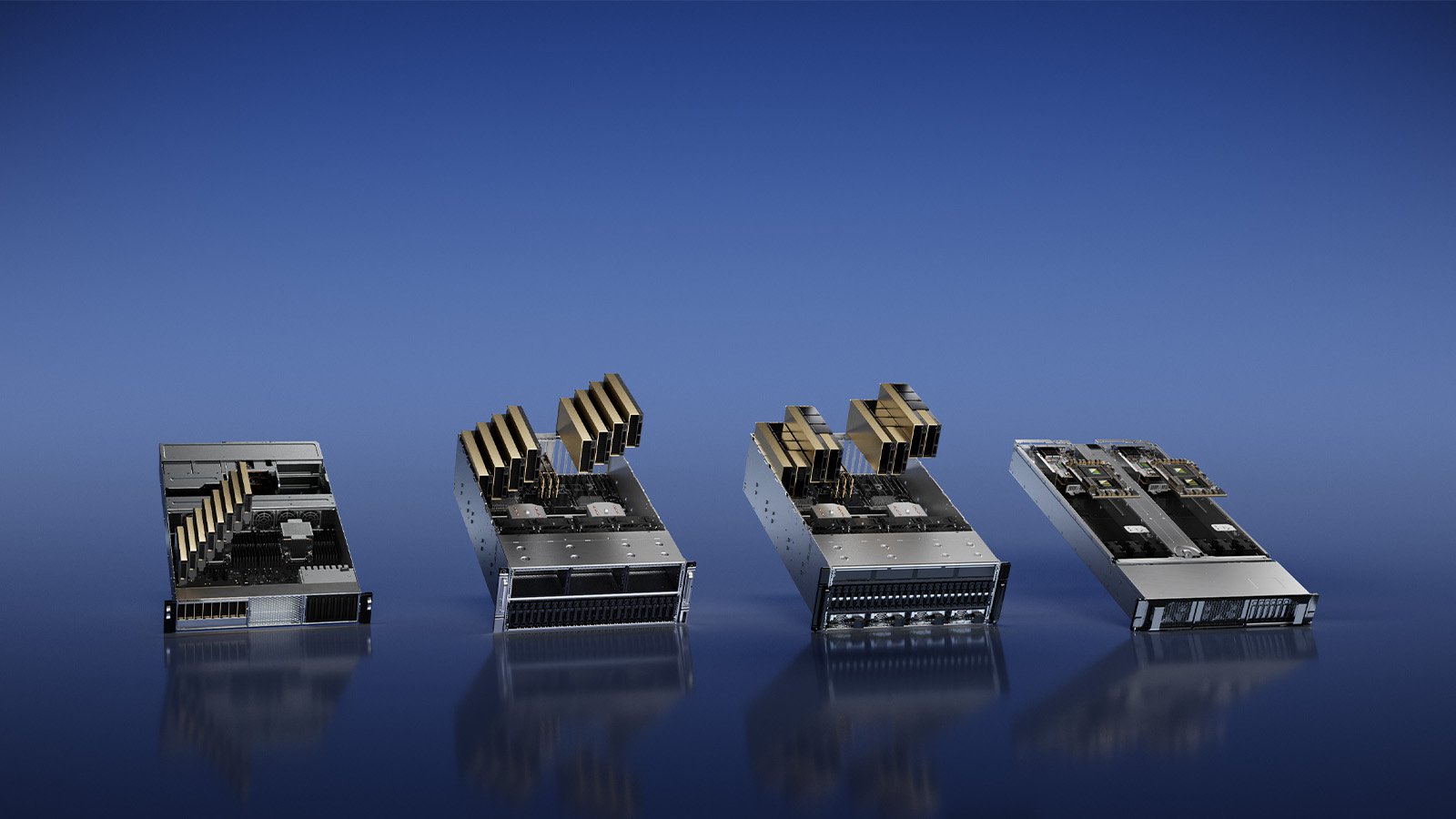

Cloudflare, Inc., the global leader in connectivity cloud solutions, announced that it will deploy NVIDIA GPUs, combined with NVIDIA Ethernet switches, at the edge of its global network. This puts AI inference compute close to users around the world. Businesses can now use Cloudflare's global data center network for affordable, secure AI inference to power cutting-edge applications anywhere. The deployment also incorporates NVIDIA's comprehensive inference software suite, including NVIDIA TensorRT-LLM and NVIDIA Triton Inference Server, to further improve the performance of AI applications, including advanced language models.

Effective immediately, all Cloudflare customers can use local compute capacity to deliver AI-powered applications and services over fast, compliance-oriented infrastructure. Organizations can scale AI workloads efficiently and pay for compute only as they need it, all through the Cloudflare platform.

AI inference, which underpins how end users experience AI, is expected to become the center of AI workloads, and demand for GPUs within organizations is surging. With data centers in more than 300 locations worldwide, Cloudflare is well positioned to deliver fast user experiences while complying with regulations around the globe.

Backed by NVIDIA GPUs, networking, and inference software, Cloudflare aims to make AI model deployment accessible to organizations worldwide. The offering relieves organizations of the work of managing, scaling, optimizing, and securing their AI deployments.

“AI inference on a network is going to be the sweet spot for many businesses: private data stays close to wherever users physically are, while still being extremely cost-effective to run because it’s nearby,” said Matthew Prince, CEO and co-founder, Cloudflare. “With NVIDIA’s state-of-the-art GPU technology on our global network, we’re making AI inference — that was previously out of reach for many customers — accessible and affordable globally.”

Ian Buck, Vice President of Hyperscale and HPC at NVIDIA, commented, “NVIDIA’s inference platform is critical to powering the next wave of generative AI applications. With NVIDIA GPUs and NVIDIA AI software available on Cloudflare, businesses will be able to create responsive new customer experiences and drive innovation across every industry.”

With the deployment of NVIDIA GPUs across its global edge network, Cloudflare is delivering:

- Low-latency generative AI experiences to end users, with NVIDIA GPUs accessible for inference tasks in over 100 cities by the end of 2023 and nearly ubiquitous availability across Cloudflare’s network by the end of 2024.

- Access to compute in close proximity to customer data, helping customers proactively meet compliance and regulatory requirements.

- Cost-effective, pay-as-you-go compute at scale, so every business can tap into the latest AI innovations without large upfront investments in GPU capacity that may go underutilized.